Operations grimoire/Network

The network is documented on Operations grimoire/Netbox.

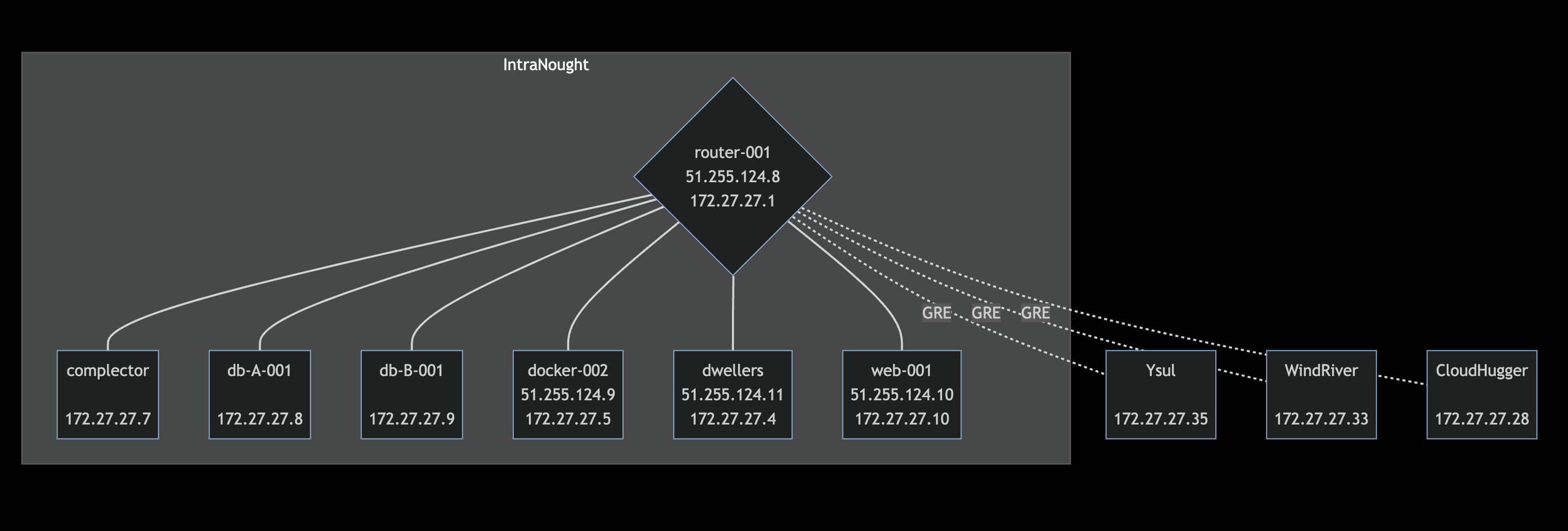

This page reflects a high-level view and is indicative only, representing the state of the network in January 2023.

For the current state of the network, NetBox, not this page, is the source of truth.

Network ranges and topology

172.27.27.0/24

Nasqueron servers are managed through Drake Network private IPs.

This subnet is divided into 16 subnets of 16 addresses.

| Subnet ID | Subnet Address | Host Address Range | Broadcast Address | Subnet Name | Description |

|---|---|---|---|---|---|

| 1 | 172.27.27.0 | 172.27.27.1 - 172.27.27.14 | 172.27.27.15 | IntraNought | VMs hosted on DreadNought hypervisor |

| 2 | 172.27.27.16 | 172.27.27.17 - 172.27.27.30 | 172.27.27.31 | prod.nasqueron.drake | Service mesh in prod (Kubernetes) ✱ |

| 3 | 172.27.27.32 | 172.27.27.33 - 172.27.27.46 | 172.27.27.47 | dev.nasqueron.drake | Development servers ✱ |

| 4 | 172.27.27.48 | 172.27.27.49 - 172.27.27.62 | 172.27.27.63 | free | |

| 5 | 172.27.27.64 | 172.27.27.65 - 172.27.27.78 | 172.27.27.79 | free | |

| 6 | 172.27.27.80 | 172.27.27.81 - 172.27.27.94 | 172.27.27.95 | free | |

| 7 | 172.27.27.96 | 172.27.27.97 - 172.27.27.110 | 172.27.27.111 | free | |

| 8 | 172.27.27.112 | 172.27.27.113 - 172.27.27.126 | 172.27.27.127 | free | |

| 9 | 172.27.27.128 | 172.27.27.129 - 172.27.27.142 | 172.27.27.143 | free | |

| 10 | 172.27.27.144 | 172.27.27.145 - 172.27.27.158 | 172.27.27.159 | free | |

| 11 | 172.27.27.160 | 172.27.27.161 - 172.27.27.174 | 172.27.27.175 | free | |

| 12 | 172.27.27.176 | 172.27.27.177 - 172.27.27.190 | 172.27.27.191 | free | |

| 13 | 172.27.27.192 | 172.27.27.193 - 172.27.27.206 | 172.27.27.207 | free | |

| 14 | 172.27.27.208 | 172.27.27.209 - 172.27.27.222 | 172.27.27.223 | free | |

| 15 | 172.27.27.224 | 172.27.27.225 - 172.27.27.238 | 172.27.27.239 | free | |

| 16 | 172.27.27.240 | 172.27.27.241 - 172.27.27.254 | 172.27.27.255 | Tunnels | Tunneling to router-001.nasqueron.org |

✱ denotes what is currently a false subnet: it contains isolated bare metal servers, not linked to any private network except through tunnels, with IPs assigned as /32 (netmask 255.255.255.255 / 0xFFFFFFFF).
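The subnet table above can be recomputed rather than maintained by hand. This is a minimal sketch using Python's standard `ipaddress` module; the `describe` helper is a name introduced here for illustration and is not part of any Nasqueron tooling.

```python
import ipaddress

# The Drake range described above.
drake = ipaddress.ip_network("172.27.27.0/24")

# Split the /24 into /28 blocks: 16 subnets of 16 addresses each.
subnets = list(drake.subnets(new_prefix=28))


def describe(subnet):
    """Return (subnet address, first host, last host, broadcast) as strings."""
    hosts = list(subnet.hosts())  # excludes the subnet and broadcast addresses
    return (
        str(subnet.network_address),
        str(hosts[0]),
        str(hosts[-1]),
        str(subnet.broadcast_address),
    )


# Print one row per subnet, numbered from 1 like the Subnet ID column.
for subnet_id, subnet in enumerate(subnets, start=1):
    print(subnet_id, *describe(subnet))
```

Each printed row should match the Subnet Address, Host Address Range and Broadcast Address columns of the table.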

172.27.27.0/28

IntraNought, VMs hosted on hyper-001

Netmask: 255.255.255.240 / 0xFFFFFFF0

| IP | Server | Reverse DNS | OS | Purpose | AUP |

|---|---|---|---|---|---|

| 172.27.27.1 | router-001 | router-001.nasqueron.drake | FreeBSD 13 | Router | Infrastructure server |

| 172.27.27.2 | Reserved for DNS server | ||||

| 172.27.27.3 | Reserved for mail server | ||||

| 172.27.27.4 | Dwellers | dwellers.nasqueron.drake | Rocky 9 | Docker development server hosting | Open for Docker images building |

| 172.27.27.5 | docker-002 | docker-002.nasqueron.drake | Rocky 9 | Docker engine | Infrastructure server |

| 172.27.27.6 | docker-001 | docker-001.nasqueron.drake | Rocky 9 | Docker engine | Infrastructure server |

| 172.27.27.7 | Complector | complector.nasqueron.drake | FreeBSD 13 | Salt, Vault | Infrastructure server |

| 172.27.27.8 | db-A-001 | db-A-001.nasqueron.drake | FreeBSD 13 | PostgreSQL | Infrastructure server |

| 172.27.27.9 | db-B-001 | db-B-001.nasqueron.drake | FreeBSD 13 | MariaDB | Infrastructure server |

| 172.27.27.10 | web-001 | web-001.nasqueron.drake | FreeBSD 13 | nginx, php-fpm | Infrastructure server |

| 172.27.27.11 | Free | ||||

| 172.27.27.12 | Free | ||||

| 172.27.27.13 | Free | ||||

| 172.27.27.14 | Reserved for temporary work on hyper-001 | ||||

| 172.27.27.15 | db-B VIP | db-B.nasqueron.drake | - | MariaDB | VIP for database cluster db-B |

VIP denotes a virtual IP assigned to an existing machine, as an alternative to a load balancer for high availability (HA).

172.27.27.16/28

Servers for the production service mesh (Kubernetes).

The netmask depends on what you need:

- to target the service mesh as a whole for access purposes: 255.255.255.240 / 0xFFFFFFF0

- to address a specific IP of a server: 255.255.255.255 / 0xFFFFFFFF, as servers are currently bare metal machines with no Ethernet card linked to a private network

| IP | Server | Reverse DNS | OS | Purpose | AUP |

|---|---|---|---|---|---|

| 172.27.27.28 | CloudHugger | cloudhugger.nasqueron.drake | Debian 10 | Kubernetes | Infrastructure server |
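To illustrate the two netmask choices, the following sketch derives the dotted-quad and hexadecimal forms from a CIDR block, and checks that a server address falls inside the /28 used to target the whole service mesh. It relies only on Python's standard `ipaddress` module; `netmask_forms` is a helper name made up for this example.

```python
import ipaddress


def netmask_forms(cidr):
    """Return the dotted-quad and hexadecimal forms of a CIDR block's netmask."""
    network = ipaddress.ip_network(cidr)
    return str(network.netmask), f"0x{int(network.netmask):08X}"


# /28 targets the service mesh range, /32 targets a single server.
mesh = ipaddress.ip_network("172.27.27.16/28")
cloudhugger = ipaddress.ip_address("172.27.27.28")

print(netmask_forms("172.27.27.16/28"))
print(netmask_forms("172.27.27.28/32"))
print(cloudhugger in mesh)
```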

172.27.27.32/28

Development and management servers. Work by humans should always be done from those servers.

The netmask depends on what you need:

- to target the servers humans use to manage the infrastructure and deploy applications: 255.255.255.240 / 0xFFFFFFF0

- to address a specific IP of a server: 255.255.255.255 / 0xFFFFFFFF, as servers are currently bare metal machines with no Ethernet card linked to a private network

| IP | Server | Reverse DNS | OS | Purpose | AUP |

|---|---|---|---|---|---|

| 172.27.27.33 | Ysul | ysul.nasqueron.drake | FreeBSD 12.1 | Nasqueron development server | Access for any Nasqueron or Wolfplex project |

| 172.27.27.34 | Free | ||||

| 172.27.27.35 | WindRiver | windriver.nasqueron.drake | FreeBSD 12.1 | Nasqueron development server | Access for any Nasqueron project |

172.27.27.240/28

IP range for tunnels from router-001.nasqueron.org

Netmask: 255.255.255.240 / 0xFFFFFFF0

| IP | Server | Reverse DNS | OS | Purpose | AUP |

|---|---|---|---|---|---|

| 172.27.27.251 | router-001 | - | - | GRE tunnel with Ubald (gre26) | Traffic between Drake 27 and Drake 26 [EXPERIMENTAL] |

| 172.27.27.252 | router-001 | - | - | GRE tunnel with Ysul (gre0) | - |

| 172.27.27.253 | router-001 | - | - | Reserved for tunnel with CloudHugger (gre1?) | - |

| 172.27.27.254 | router-001 | - | - | GRE tunnel with WindRiver (gre2) | - |

DNS entries

| Domain | IP | Description |

|---|---|---|

| k8s.prod.nasqueron.drake | 172.27.27.28 | Advertise address for k8s cluster |

Other network ranges

Kubernetes clusters use the following network ranges:

| Cluster name | IP range | DNS domain | Use |

|---|---|---|---|

| nasqueron-k8s-prod | 10.92.0.0/16 | k8s.prod.nasqueron.local | Kubernetes services |

| nasqueron-k8s-prod-pods | 10.192.0.0/16 | None | Pods for nasqueron-k8s-prod |

Network manual

Build the network

This private network isn't trivial to build as machines are located in different datacenter cabinets, without sharing a common private physical network.

We use the following techniques to recreate those connections:

- On a hypervisor, each VM has a second network card, with a Drake IP assigned

- Tunnels using ICANNnet as pipelines allow parts of Drake to be connected, with software like tinc

Configure private network card

In rOPS: pillar/nodes/nodes.sls, define a private_interface block with the Drake network information for this machine.

The network unit in the core role should pick it up and configure it, see rOPS: roles/core/network/private.sls, at least for CentOS/RHEL/Rocky and FreeBSD.

Tinc

Tinc lets us create a mesh network and bridge the network segments.

In router mode, it only forwards IPv4 and IPv6 traffic. In switch and hub modes, it can broadcast all packets to other daemons; switch mode also behaves as a proper Ethernet bridge, routing directly to MAC addresses.

Routes

Systemd: routes service

On Linux systems, we have two issues:

- network-scripts from RHEL are deprecated, and require us to attach routes to a specific network device

- nmstate is a declarative solution with YAML files, but it requires a lot of dependencies and relies on NetworkManager (which we don't use on CentOS/Rocky machines)

As our only requirement for routing on Linux is to call "ip route", we provide a simple tool that reads /etc/routes.conf and calls ip route replace with the content. The routes service calls that tool.

/etc/routes.conf is generated by Salt in the roles/network/routes unit. It contains one route per line; blank lines and comments (lines starting with #) are ignored.
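The behaviour described above can be sketched as follows. This is not the actual tool shipped by the Salt unit: the function names are hypothetical, and it assumes each non-comment line of /etc/routes.conf holds arguments that can be passed as-is to `ip route replace`.

```python
import subprocess


def parse_routes(text):
    """Return one argument list per route, skipping blank lines and # comments."""
    routes = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        routes.append(line.split())
    return routes


def build_commands(routes):
    """Prefix each route with `ip route replace` (assumed line format)."""
    return [["ip", "route", "replace", *route] for route in routes]


def apply_routes(path="/etc/routes.conf"):
    """Read the file and run one `ip route replace` per route (needs root)."""
    with open(path, encoding="utf-8") as handle:
        routes = parse_routes(handle.read())
    for command in build_commands(routes):
        subprocess.run(command, check=True)


# Dry run on sample content: print the commands instead of running them.
sample = """\
# Drake network
172.27.27.0/24 via 172.27.27.1

"""
for command in build_commands(parse_routes(sample)):
    print(" ".join(command))
```

Using `ip route replace` rather than `ip route add` keeps the operation idempotent, so the service can be restarted without failing on routes that already exist.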

Troubleshoot

A non-IP packet doesn't pass

If the connection is managed by tinc, ensure it's configured in switch mode: in router mode, it only forwards IPv4 and IPv6 unicast packets. For Linux, check this bridging reference guide.

A route is missing

- Linux: ip route add 172.27.27.0/24 via $GW

- FreeBSD: route add -net 172.27.27.0/24 $GW

$GW is:

- 172.27.27.1 for IntraNought (dwellers, docker-001) and Tinc tunnels

- the tunnel IP, for example 172.27.27.27 for GRE tunnels

Perhaps we could make the use of 172.27.27.1 standard with an intermediate static route. For example, for Ysul:

route add -net 172.27.27.1/32 172.27.27.252

route add -net 172.27.27.0/24 172.27.27.1
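To see why the two routes of the Ysul example can coexist, here is a small model of longest-prefix matching: the /32 route wins for 172.27.27.1 itself, and the /24 route covers the rest of Drake. This is only a sketch of route selection; it does not model how the FreeBSD kernel resolves the gateway, and `next_hop` is a name introduced for this illustration.

```python
import ipaddress

# The two static routes from the Ysul example: (destination, gateway).
routes = [
    (ipaddress.ip_network("172.27.27.1/32"), ipaddress.ip_address("172.27.27.252")),
    (ipaddress.ip_network("172.27.27.0/24"), ipaddress.ip_address("172.27.27.1")),
]


def next_hop(destination):
    """Pick the gateway of the most specific route containing destination."""
    address = ipaddress.ip_address(destination)
    matches = [(net, gw) for net, gw in routes if address in net]
    if not matches:
        return None  # no route in this table covers the destination
    best = max(matches, key=lambda match: match[0].prefixlen)
    return str(best[1])


print(next_hop("172.27.27.1"))  # the router itself, reached through the tunnel
print(next_hop("172.27.27.6"))  # any other Drake host, sent to the router
```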

Routes are OK but packets still don't pass

- On router-001, check sysctl net.inet.ip.forwarding: it must be 1

- To link servers A and B, you need incoming and outgoing routes A->router->B and B->router->A: that's FOUR of them to check
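As a checklist aid, the four routes can be enumerated explicitly. The sketch below is purely illustrative: `routes_to_check` is a hypothetical helper, and the server names passed to it are just examples taken from the tables on this page.

```python
def routes_to_check(server_a, server_b, router="router-001"):
    """List (host, destination, via) triples needed for A and B to talk through the router."""
    return [
        (server_a, server_b, router),  # A sends to B through the router
        (router, server_b, None),      # the router knows how to reach B
        (server_b, server_a, router),  # B replies to A through the router
        (router, server_a, None),      # the router knows how to reach A
    ]


for host, destination, via in routes_to_check("ysul", "docker-001"):
    suffix = f" via {via}" if via else ""
    print(f"on {host}: route to {destination}{suffix}")
```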